
Applies to: Windows Server 2019, Windows Server 2016, Windows Server (Semi-Annual Channel)

Use the information in this topic to tune the performance of network adapters for computers that are running Windows Server 2016 and later versions. If your network adapters provide tuning options, you can use these options to optimize network throughput and resource usage.

The correct tuning settings for your network adapters depend on the following variables:

- The network adapter and its feature set

- The type of workload that the server performs

- The server hardware and software resources

- Your performance goals for the server

The following sections describe some of your performance tuning options.

Enabling offload features

Turning on network adapter offload features is usually beneficial. However, the network adapter might not be powerful enough to handle the offload capabilities with high throughput.

Important

Do not use the offload features IPsec Task Offload or TCP Chimney Offload. These technologies are deprecated in Windows Server 2016, and might adversely affect server and networking performance. In addition, these technologies might not be supported by Microsoft in the future.

For example, consider a network adapter that has limited hardware resources. In that case, enabling segmentation offload features might reduce the maximum sustainable throughput of the adapter. However, if the reduced throughput is acceptable, you should go ahead and enable the segmentation offload features.

Note

Some network adapters require you to enable offload features independently for the send and receive paths.

Enabling receive-side scaling (RSS) for web servers

RSS can improve web scalability and performance when there are fewer network adapters than logical processors on the server. When all the web traffic is going through the RSS-capable network adapters, the server can process incoming web requests from different connections simultaneously across different CPUs.

Important

Avoid using both non-RSS network adapters and RSS-capable network adapters on the same server. Because of the load distribution logic in RSS and Hypertext Transfer Protocol (HTTP), performance might be severely degraded if a non-RSS-capable network adapter accepts web traffic on a server that has one or more RSS-capable network adapters. In this circumstance, you should use only RSS-capable network adapters, or disable RSS on the Advanced Properties tab of the network adapter properties.

To determine whether a network adapter is RSS-capable, you can view the RSS information on the Advanced Properties tab of the network adapter properties.

RSS Profiles and RSS Queues

The default RSS predefined profile is NUMAStatic, which differs from the default that the previous versions of Windows used. Before you start using RSS profiles, review the available profiles to understand when they are beneficial and how they apply to your network environment and hardware.

For example, if you open Task Manager and review the logical processors on your server, and they seem to be underutilized for receive traffic, you can try increasing the number of RSS queues from the default of two to the maximum that your network adapter supports. Your network adapter might have options to change the number of RSS queues as part of the driver.

Increasing network adapter resources

For network adapters that allow you to manually configure resources such as receive and send buffers, you should increase the allocated resources.

Some network adapters set their receive buffers low to conserve allocated memory from the host. The low value results in dropped packets and decreased performance. Therefore, for receive-intensive scenarios, we recommend that you increase the receive buffer value to the maximum.

Note

If a network adapter does not expose manual resource configuration, either it dynamically configures the resources, or the resources are set to a fixed value that cannot be changed.

Enabling interrupt moderation

To control interrupt moderation, some network adapters expose different interrupt moderation levels, different buffer coalescing parameters (sometimes separately for send and receive buffers), or both.

You should consider interrupt moderation for CPU-bound workloads. When using interrupt moderation, consider the trade-off between host CPU savings with higher latency (fewer interrupts) and increased host CPU usage with lower latency (more interrupts). If the network adapter does not perform interrupt moderation, but it does expose buffer coalescing, you can improve performance by increasing the number of coalesced buffers to allow more buffers per send or receive.
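The trade-off can be illustrated with a deliberately simplified Python model. This is a hypothetical sketch, not how any particular adapter implements moderation: it assumes packets arrive at a steady rate and that the adapter raises one interrupt per fixed batch of packets (real adapters usually also bound the wait with a timer). The function and parameter names are invented for illustration.

```python
def moderation_tradeoff(packets_per_s: float,
                        packets_per_interrupt: int) -> tuple[float, float]:
    """Toy model of count-based interrupt moderation.

    Assumes a steady arrival rate and one interrupt after every
    `packets_per_interrupt` packets. Returns
    (interrupts per second, worst-case added delay in microseconds).
    """
    if packets_per_interrupt < 1:
        raise ValueError("packets_per_interrupt must be at least 1")
    interrupts_per_s = packets_per_s / packets_per_interrupt
    # The first packet of a batch waits for the remaining arrivals.
    added_delay_us = (packets_per_interrupt - 1) / packets_per_s * 1e6
    return interrupts_per_s, added_delay_us


for batch in (1, 4, 16):
    irqs, delay = moderation_tradeoff(100_000, batch)
    print(f"batch={batch:>2}: {irqs:>9,.0f} interrupts/s, +{delay:5.1f} us")
```

Larger batches cut the interrupt rate (and the CPU spent servicing interrupts) proportionally, while the worst-case delay added to the first packet in each batch grows linearly.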

Performance tuning for low-latency packet processing

Many network adapters provide options to optimize operating system-induced latency. Latency is the elapsed time between the network driver processing an incoming packet and the network driver sending the packet back. This time is usually measured in microseconds. For comparison, the transmission time for packets sent over long distances is usually measured in milliseconds (several orders of magnitude larger). This tuning will not reduce the time a packet spends in transit.
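To show why adapter tuning cannot offset distance, the following Python sketch estimates one-way propagation delay through optical fiber. It assumes a signal speed of roughly 2 x 10^8 m/s (about two-thirds of the speed of light in a vacuum); the function name is illustrative only.

```python
# Assumption: light in optical fiber travels at roughly 2e8 m/s.
FIBER_SPEED_M_PER_S = 2.0e8


def propagation_delay_us(distance_km: float) -> float:
    """One-way propagation delay, in microseconds, over a fiber path."""
    seconds = distance_km * 1_000 / FIBER_SPEED_M_PER_S
    return seconds * 1e6


for km in (0.1, 100, 1_000):
    print(f"{km:>7} km: {propagation_delay_us(km):>9,.1f} us")
```

At 1,000 km the delay is about 5 ms each way, which dwarfs the few microseconds that adapter and operating system tuning can remove.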

Following are some performance tuning suggestions for microsecond-sensitive networks.

Set the computer BIOS to High Performance, with C-states disabled. However, note that this is system and BIOS dependent, and some systems will provide higher performance if the operating system controls power management. You can check and adjust your power management settings from Settings or by using the powercfg command. For more information, see Powercfg Command-Line Options.

Set the operating system power management profile to High Performance System.

Note

This setting does not work properly if the system BIOS has been set to disable operating system control of power management.

Enable static offloads. For example, enable the UDP Checksums, TCP Checksums, and Large Send Offload (LSO) settings.

If the traffic is multi-streamed, such as when receiving high-volume multicast traffic, enable RSS.

Disable the Interrupt Moderation setting for network card drivers that require the lowest possible latency. Remember, this configuration can use more CPU time and it represents a tradeoff.

Handle network adapter interrupts and DPCs on a core processor that shares CPU cache with the core that is being used by the program (user thread) that is handling the packet. CPU affinity tuning can be used to direct a process to certain logical processors in conjunction with RSS configuration to accomplish this. Using the same core for the interrupt, DPC, and user mode thread exhibits worse performance as load increases because the ISR, DPC, and thread contend for the use of the core.

System management interrupts

Many hardware systems use System Management Interrupts (SMI) for a variety of maintenance functions, such as reporting error correction code (ECC) memory errors, maintaining legacy USB compatibility, controlling the fan, and managing BIOS-controlled power settings.

The SMI is the highest-priority interrupt on the system, and places the CPU in a management mode. This mode preempts all other activity while SMI runs an interrupt service routine, typically contained in BIOS.

Unfortunately, this behavior can result in latency spikes of 100 microseconds or more.

If you need to achieve the lowest latency, you should request a BIOS version from your hardware provider that reduces SMIs to the lowest degree possible. These BIOS versions are frequently referred to as 'low latency BIOS' or 'SMI free BIOS.' In some cases, it is not possible for a hardware platform to eliminate SMI activity altogether because it is used to control essential functions (for example, cooling fans).

Note

The operating system cannot control SMIs because the logical processor is running in a special maintenance mode, which prevents operating system intervention.

Performance tuning TCP


You can use the following items to tune TCP performance.

TCP receive window autotuning

In Windows Vista, Windows Server 2008, and later versions of Windows, the Windows network stack uses a feature that is named TCP receive window autotuning level to negotiate the TCP receive window size. This feature can negotiate a defined receive window size for every TCP communication during the TCP Handshake.

In earlier versions of Windows, the Windows network stack used a fixed-size receive window (65,535 bytes) that limited the overall potential throughput for connections. The total achievable throughput of TCP connections could limit network usage scenarios. TCP receive window autotuning enables these scenarios to fully use the network.

For a TCP receive window that has a particular size, you can use the following equation to calculate the total throughput of a single connection.

Total achievable throughput in bytes per second = TCP receive window size in bytes * (1 / connection round-trip latency in seconds)

For example, with the fixed 65,535-byte window, a connection that has a latency of 10 ms can achieve a total throughput of only about 52 Mbps. A 10-ms latency is reasonable for a large corporate network infrastructure. However, by using autotuning to adjust the receive window, the connection can achieve the full line rate of a 1-Gbps connection.
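The throughput equation can be sketched in Python as follows. The function names are hypothetical, and treating "connection latency" as the round-trip time is an assumption consistent with how the receive window limits the amount of unacknowledged data in flight. With the legacy 65,535-byte window and a 10-ms round trip, it yields roughly 52 Mbps.

```python
def max_throughput_bps(window_bytes: float, rtt_s: float) -> float:
    """Upper bound on single-connection TCP throughput, in bits per second.

    The sender can have at most one receive window of unacknowledged data
    in flight per round trip, so throughput <= window / RTT.
    """
    if rtt_s <= 0:
        raise ValueError("rtt_s must be positive")
    return window_bytes * 8 / rtt_s


def window_for_rate_bytes(rate_bps: float, rtt_s: float) -> float:
    """Receive window (bandwidth-delay product) needed to sustain rate_bps."""
    return rate_bps / 8 * rtt_s


# Legacy fixed window of 65,535 bytes over a 10 ms round trip.
print(f"{max_throughput_bps(65_535, 0.010) / 1e6:.1f} Mbps")
# Window needed to fill a 1-Gbps link over the same round trip.
print(f"{window_for_rate_bytes(1e9, 0.010):,.0f} bytes")
```

The first line prints about 52.4 Mbps; the second shows that a window of roughly 1.25 MB is needed to fill a 1-Gbps link at 10 ms, far beyond the legacy 64-KB limit, which is why receive window autotuning matters.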

Some applications define the size of the TCP receive window. If the application does not define the receive window size, the link speed determines the size as follows:

- Less than 1 megabit per second (Mbps): 8 kilobytes (KB)

- 1 Mbps to 100 Mbps: 17 KB

- 100 Mbps to 10 gigabits per second (Gbps): 64 KB

- 10 Gbps or faster: 128 KB

For example, on a computer that has a 1-Gbps network adapter installed, the window size should be 64 KB.
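The link-speed thresholds above can be expressed as a small Python lookup. This is a sketch of the listed defaults only: it assumes "KB" means 1,024 bytes and that each range includes its lower bound and excludes its upper bound, since the list does not say which range a boundary value such as exactly 100 Mbps falls into.

```python
KB = 1024  # assumption: the article's "KB" means 1,024 bytes


def default_receive_window_bytes(link_speed_bps: float) -> int:
    """Default receive window when the application does not set one.

    Ranges follow the list above. Treating each range as including its
    lower bound and excluding its upper bound is an assumption.
    """
    if link_speed_bps < 1e6:
        return 8 * KB
    if link_speed_bps < 100e6:
        return 17 * KB
    if link_speed_bps < 10e9:
        return 64 * KB
    return 128 * KB


print(default_receive_window_bytes(1e9) // KB)  # 64, matching the 1-Gbps example
```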

This feature also makes full use of other features to improve network performance. These features include the rest of the TCP options that are defined in RFC 1323. By using these features, Windows-based computers can negotiate TCP receive window sizes that are smaller but are scaled at a defined value, depending on the configuration. This behavior makes the sizes easier for networking devices to handle.

Note

You may experience an issue in which the network device is not compliant with the TCP window scale option, as defined in RFC 1323, and therefore doesn't support the scale factor. In such cases, refer to KB 934430, "Network connectivity fails when you try to use Windows Vista behind a firewall device," or contact the Support team for your network device vendor.

Review and configure TCP receive window autotuning level

You can use either netsh commands or Windows PowerShell cmdlets to review or modify the TCP receive window autotuning level.

Note

Starting with Windows 10 and Windows Server 2019, you can no longer use the registry to configure the TCP receive window size, as you could in earlier versions of Windows. For more information about the deprecated settings, see Deprecated TCP parameters.

Note

For detailed information about the available autotuning levels, see Autotuning levels.

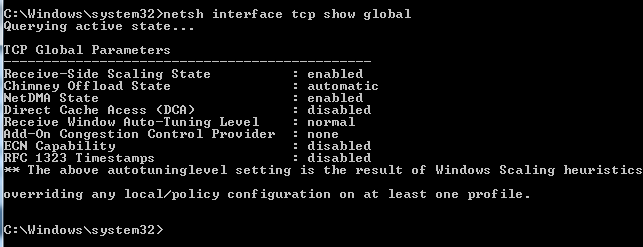

To use netsh to review or modify the autotuning level

To review the current settings, open a Command Prompt window and run the following command:

The output of this command should resemble the following:

To modify the setting, run the following command at the command prompt:

Note

In the preceding command, <Value> represents the new value for the autotuning level.

For more information about this command, see Netsh commands for Interface Transmission Control Protocol.

To use PowerShell to review or modify the autotuning level

To review the current settings, open a PowerShell window and run the following cmdlet.

The output of this cmdlet should resemble the following.

To modify the setting, run the following cmdlet at the PowerShell command prompt.

Note

In the preceding command, <Value> represents the new value for the autotuning level.

For more information about these cmdlets, see the following articles:

Autotuning levels

You can set receive window autotuning to any of five levels. The default level is Normal. The following table describes the levels.

| Level | Hexadecimal value | Comments |
|---|---|---|
| Normal (default) | 0x8 (scale factor of 8) | Set the TCP receive window to grow to accommodate almost all scenarios. |
| Disabled | No scale factor available | Set the TCP receive window at its default value. |
| Restricted | 0x4 (scale factor of 4) | Set the TCP receive window to grow beyond its default value, but limit such growth in some scenarios. |
| Highly Restricted | 0x2 (scale factor of 2) | Set the TCP receive window to grow beyond its default value, but do so very conservatively. |
| Experimental | 0xE (scale factor of 14) | Set the TCP receive window to grow to accommodate extreme scenarios. |
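To relate the table to window sizes, the following Python sketch computes the largest window each scale factor can express. Under RFC 1323 window scaling, the receiver's advertised 16-bit window value is shifted left by the negotiated scale factor. These are theoretical ceilings; the window that autotuning actually grows to depends on the connection and may be much smaller. Mapping "Disabled" to a shift of 0 (no scaling) is an assumption.

```python
# Window scale shift counts taken from the autotuning level table.
# Mapping "Disabled" to a shift of 0 (no scaling) is an assumption.
SCALE_SHIFT = {
    "Normal": 8,
    "Disabled": 0,
    "Restricted": 4,
    "Highly Restricted": 2,
    "Experimental": 14,
}

MAX_WINDOW_FIELD = 65_535  # largest value of the 16-bit TCP window field


def max_scaled_window_bytes(level: str) -> int:
    """Theoretical ceiling on the receive window for an autotuning level.

    The advertised 16-bit window value is multiplied by 2**shift,
    i.e. shifted left by the scale factor.
    """
    return MAX_WINDOW_FIELD << SCALE_SHIFT[level]


for name in SCALE_SHIFT:
    print(f"{name:<17} {max_scaled_window_bytes(name):>13,} bytes")
```

For example, Normal allows up to 16,776,960 bytes (about 16 MB), while Experimental allows up to 1,073,725,440 bytes (about 1 GB).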

If you use an application to capture network packets, the application should report data that resembles the following for different window autotuning level settings.

[Figure: sample packet captures for the Normal (default state), Disabled, Restricted, Highly restricted, and Experimental autotuning levels]

Deprecated TCP parameters

The following registry settings from Windows Server 2003 are no longer supported, and are ignored in later versions.

- TcpWindowSize

- NumTcbTablePartitions

- MaxHashTableSize


All of these settings were located in the following registry subkey:

HKEY_LOCAL_MACHINE\System\CurrentControlSet\Services\Tcpip\Parameters

Windows Filtering Platform

Windows Vista and Windows Server 2008 introduced the Windows Filtering Platform (WFP). WFP provides APIs to non-Microsoft independent software vendors (ISVs) to create packet processing filters. Examples include firewall and antivirus software.

Note

A poorly written WFP filter can significantly decrease a server's networking performance. For more information, see Porting Packet-Processing Drivers and Apps to WFP in the Windows Dev Center.

For links to all topics in this guide, see Network Subsystem Performance Tuning.

SMB configuration considerations

Do not enable any services or features that your file server and clients do not require. These might include SMB signing, client-side caching, file system mini-filters, search service, scheduled tasks, NTFS encryption, NTFS compression, IPSEC, firewall filters, Teredo, and SMB encryption.

Ensure that the BIOS and operating system power management modes are set as needed, which might include High Performance mode or altered C-state settings. Ensure that the latest, most resilient, and fastest storage and networking device drivers are installed.

Copying files is a common operation performed on a file server. Windows Server has several built-in file copy utilities that you can run by using a command prompt. Robocopy is recommended. Introduced in Windows Server 2008 R2, the /mt option of Robocopy can significantly improve speed on remote file transfers by using multiple threads when copying multiple small files. We also recommend using the /log option to reduce console output by redirecting logs to a NUL device or to a file. When you use Xcopy, we recommend adding the /q and /k options to your existing parameters. The former option reduces CPU overhead by reducing console output and the latter reduces network traffic.
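As a sketch, the following Python helper assembles a Robocopy command line with the /mt and /log options described above. The helper name, the example paths, and the thread count of 32 are hypothetical; /MT:n accepts 1 to 128 threads (8 is the default when /MT is given without a number), and /LOG:<file> writes the log to a file, so "NUL" discards it.

```python
def robocopy_args(source: str, destination: str,
                  threads: int = 8, log_target: str = "NUL") -> list[str]:
    """Build a Robocopy command line that uses /MT and /LOG.

    /MT:n sets the number of copy threads (1 to 128).
    /LOG:<target> sends the log to a file instead of the console;
    "NUL" discards it.
    """
    if not 1 <= threads <= 128:
        raise ValueError("Robocopy /MT accepts 1 to 128 threads")
    return ["robocopy", source, destination,
            f"/MT:{threads}", f"/LOG:{log_target}"]


# Hypothetical paths; on a Windows host the list could be passed to subprocess.run().
print(" ".join(robocopy_args(r"C:\data", r"\\fileserver\share\data", threads=32)))
```

If you wrap Robocopy in a script, note that exit codes below 8 indicate success (they encode which files were copied or skipped), so a nonzero exit code alone does not mean the copy failed.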

SMB performance tuning

File server performance and available tunings depend on the SMB protocol that is negotiated between each client and the server, and on the deployed file server features. The highest protocol version currently available is SMB 3.1.1 in Windows Server 2016 and Windows 10. You can check which version of SMB is in use on your network by running the Windows PowerShell cmdlets Get-SMBConnection on clients and Get-SMBSession | FL on servers.

SMB 3.0 protocol family

SMB 3.0 was introduced in Windows Server 2012 and further enhanced in Windows Server 2012 R2 (SMB 3.02) and Windows Server 2016 (SMB 3.1.1). This version introduced technologies that may significantly improve performance and availability of the file server. For more info, see SMB in Windows Server 2012 and 2012 R2 and What's new in SMB 3.1.1.

SMB Direct

SMB Direct introduced the ability to use RDMA network interfaces for high throughput with low latency and low CPU utilization.

Whenever SMB detects an RDMA-capable network, it automatically tries to use the RDMA capability. However, if for any reason the SMB client fails to connect using the RDMA path, it will simply continue to use TCP/IP connections instead. All RDMA interfaces that are compatible with SMB Direct are required to also implement a TCP/IP stack, and SMB Multichannel is aware of that.

SMB Direct is not required in any SMB configuration, but it's always recommended for those who want lower latency and lower CPU utilization.

For more info about SMB Direct, see Improve Performance of a File Server with SMB Direct.

SMB Multichannel

SMB Multichannel allows file servers to use multiple network connections simultaneously and provides increased throughput.

For more info about SMB Multichannel, see Deploy SMB Multichannel.

SMB Scale-Out

SMB Scale-Out allows SMB 3.0 in a cluster configuration to make a share available on all nodes of a cluster. This active/active configuration makes it possible to scale file server clusters further, without a complex configuration with multiple volumes, shares and cluster resources. The maximum share bandwidth is the total bandwidth of all file server cluster nodes. The total bandwidth is no longer limited by the bandwidth of a single cluster node, but rather depends on the capability of the backing storage system. You can increase the total bandwidth by adding nodes.

For more info about SMB Scale-Out, see Scale-Out File Server for Application Data Overview and the blog post To scale out or not to scale out, that is the question.

Performance counters for SMB 3.0

The following SMB performance counters were introduced in Windows Server 2012, and they are considered a base set of counters when you monitor the resource usage of SMB 2 and higher versions. Log the performance counters to a local, raw (.blg) performance counter log. It is less expensive to collect all instances by using the wildcard character (*), and then extract particular instances during post-processing by using Relog.exe.
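The collect-everything-then-filter approach can be sketched in Python. The helpers below are hypothetical: one builds counter paths in the standard \Object(Instance)\Counter form (using the * wildcard at collection time), and the other assembles Relog.exe arguments that extract chosen counters from a .blg log into CSV using Relog's -c (counter paths), -f (output format), and -o (output file) options. The share instance name is a made-up placeholder; check the instance names that actually appear in your log.

```python
from typing import Optional


def counter_path(obj: str, counter: str, instance: Optional[str] = None) -> str:
    """Build a counter path in the \\Object(Instance)\\Counter form."""
    if instance is None:
        return f"\\{obj}\\{counter}"
    return f"\\{obj}({instance})\\{counter}"


def relog_extract_args(blg_file: str, paths: list[str], csv_file: str) -> list[str]:
    """Relog.exe arguments that extract selected counters from a .blg log to CSV."""
    return ["relog", blg_file, "-c", *paths, "-f", "CSV", "-o", csv_file]


# Collect every instance cheaply with the wildcard...
collect = counter_path("SMB Client Shares", "Credit Stalls /sec", "*")
# ...then pull out one (hypothetical) share instance during post-processing.
extract = counter_path("SMB Client Shares", "Credit Stalls /sec", r"\\fs01\data")
print(collect)
print(relog_extract_args("smb.blg", [extract], "smb.csv"))
```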

SMB Client Shares

These counters display information about file shares on the server that are being accessed by a client that is using SMB 2.0 or higher versions.

If you're familiar with the regular disk counters in Windows, you might notice a certain resemblance. That's not by accident. The SMB client shares performance counters were designed to exactly match the disk counters. This way you can easily reuse any guidance on application disk performance tuning you currently have. For more info about counter mapping, see Per share client performance counters blog.

SMB Server Shares

These counters display information about the SMB 2.0 or higher file shares on the server.

SMB Server Sessions

These counters display information about SMB server sessions that are using SMB 2.0 or higher.

Turning on counters on the server side (server shares or server sessions) may have a significant performance impact for high-I/O workloads.

Resume Key Filter

These counters display information about the Resume Key Filter.

SMB Direct Connection

These counters measure different aspects of connection activity. A computer can have multiple SMB Direct connections. The SMB Direct Connection counters represent each connection as a pair of IP addresses and ports, where the first IP address and port represent the connection's local endpoint, and the second IP address and port represent the connection's remote endpoint.

Physical Disk, SMB, CSV FS performance counters relationships

For more info on how Physical Disk, SMB, and CSV FS (file system) counters are related, see the following blog post: Cluster Shared Volume Performance Counters.

Tuning parameters for SMB file servers


The following REG_DWORD registry settings can affect the performance of SMB file servers:

Smb2CreditsMin and Smb2CreditsMax

The defaults are 512 and 8192, respectively. These parameters allow the server to throttle client operation concurrency dynamically within the specified boundaries. Some clients might achieve increased throughput with higher concurrency limits, for example, copying files over high-bandwidth, high-latency links.
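To see why high-bandwidth, high-latency links benefit from higher credit limits, the following Python sketch estimates how many credits a client would need to keep a link full. It is a rough model under stated assumptions: each credit is taken to cover up to 64 KiB of payload (the multi-credit charging rule in the MS-SMB2 specification), server processing time is ignored, and the function name is hypothetical.

```python
import math

# Assumption based on MS-SMB2 multi-credit requests: one credit covers up to
# 64 KiB of payload, so larger I/Os consume proportionally more credits.
CREDIT_PAYLOAD_BYTES = 64 * 1024


def credits_to_fill_link(link_bps: float, rtt_s: float) -> int:
    """Rough number of credits a client needs to keep a link busy.

    By Little's law, the data in flight must equal bandwidth * round-trip
    time (the bandwidth-delay product). Server processing time is ignored.
    """
    bytes_in_flight = link_bps / 8 * rtt_s
    return math.ceil(bytes_in_flight / CREDIT_PAYLOAD_BYTES)


print(credits_to_fill_link(10e9, 0.050))   # 10 Gbps, 50 ms round trip
print(credits_to_fill_link(100e9, 0.100))  # 100 Gbps, 100 ms round trip
```

Under these assumptions, the first case (954 credits) fits well within the default Smb2CreditsMax of 8192, whereas the second (19,074 credits) would exceed it; that is the kind of link where raising the maximum might help.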

Tip

Prior to Windows 10 and Windows Server 2016, the number of credits granted to the client varied dynamically between Smb2CreditsMin and Smb2CreditsMax based on an algorithm that attempted to determine the optimal number of credits to grant based on network latency and credit usage. In Windows 10 and Windows Server 2016, the SMB server was changed to unconditionally grant credits upon request up to the configured maximum number of credits. As part of this change, the credit throttling mechanism, which reduces the size of each connection's credit window when the server is under memory pressure, was removed. The kernel's low memory event that triggered throttling is only signaled when the server is so low on memory (< a few MB) as to be useless. Since the server no longer shrinks credit windows, the Smb2CreditsMin setting is no longer necessary and is now ignored.

You can monitor SMB Client Shares\Credit Stalls /Sec to see if there are any issues with credits.

AdditionalCriticalWorkerThreads

The default is 0, which means that no additional critical kernel worker threads are added. This value affects the number of threads that the file system cache uses for read-ahead and write-behind requests. Raising this value can allow for more queued I/O in the storage subsystem, and it can improve I/O performance, particularly on systems with many logical processors and powerful storage hardware.

Tip

The value may need to be increased if the amount of cache manager dirty data (performance counter Cache\Dirty Pages) is growing to consume a large portion (over ~25%) of memory or if the system is doing lots of synchronous read I/Os.
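The dirty-data rule of thumb in this tip can be checked with a short Python sketch. It assumes a 4-KiB page size (typical on x64 Windows) to convert the Cache\Dirty Pages counter into bytes, and it models only the ~25% memory signal, not the synchronous-read indicator; the function names are hypothetical.

```python
PAGE_SIZE_BYTES = 4096  # assumption: 4-KiB pages, typical on x64 Windows


def dirty_cache_fraction(dirty_pages: int, total_memory_bytes: int) -> float:
    """Fraction of physical memory held by Cache\\Dirty Pages."""
    return dirty_pages * PAGE_SIZE_BYTES / total_memory_bytes


def may_need_more_workers(dirty_pages: int, total_memory_bytes: int,
                          threshold: float = 0.25) -> bool:
    """Apply the ~25% rule of thumb for AdditionalCriticalWorkerThreads."""
    return dirty_cache_fraction(dirty_pages, total_memory_bytes) > threshold


GIB = 1024 ** 3
print(may_need_more_workers(2_000_000, 16 * GIB))  # ~48% of 16 GiB -> True
print(may_need_more_workers(100_000, 16 * GIB))    # ~2% of 16 GiB -> False
```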

MaxThreadsPerQueue

The default is 20. Increasing this value raises the number of threads that the file server can use to service concurrent requests. When a large number of active connections need to be serviced, and hardware resources, such as storage bandwidth, are sufficient, increasing the value can improve server scalability, performance, and response times.

Tip

An indication that the value may need to be increased is if the SMB2 work queues are growing very large (performance counter 'Server Work Queues\Queue Length\SMB2 NonBlocking *' is consistently above ~100).

Note

In Windows 10 and Windows Server 2016, MaxThreadsPerQueue is unavailable. The number of threads for a thread pool will be '20 * the number of processors in a NUMA node'.
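The queue-length indicator and the fixed thread-pool size can be sketched in Python. Interpreting "consistently above ~100" as at least 90% of sampled values exceeding 100 is an assumption, as are the function names; the pool-size formula follows the note above.

```python
def queue_consistently_long(samples: list[float], threshold: float = 100,
                            min_fraction: float = 0.9) -> bool:
    """True if most queue-length samples exceed the threshold.

    "Consistently" is interpreted here as at least min_fraction of the
    samples above threshold; that interpretation is an assumption.
    """
    if not samples:
        return False
    above = sum(1 for s in samples if s > threshold)
    return above / len(samples) >= min_fraction


def smb2_pool_threads(processors_per_numa_node: int) -> int:
    """Thread-pool size in Windows 10 and Windows Server 2016, per the note."""
    return 20 * processors_per_numa_node


print(queue_consistently_long([120, 140, 160, 130, 150]))  # True
print(queue_consistently_long([40, 220, 60, 50]))          # False
print(smb2_pool_threads(16))                               # 320
```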

AsynchronousCredits

The default is 512. This parameter limits the number of concurrent asynchronous SMB commands that are allowed on a single connection. Some cases (such as when there is a front-end server with a back-end IIS server) require a large amount of concurrency (for file change notification requests, in particular). The value of this entry can be increased to support these cases.

SMB server tuning example


The following settings can optimize a computer for file server performance in many cases. The settings are not optimal or appropriate on all computers. You should evaluate the impact of individual settings before applying them.

| Parameter | Value | Default |
|---|---|---|
| AdditionalCriticalWorkerThreads | 64 | 0 |
| MaxThreadsPerQueue | 64 | 20 |

SMB client performance monitor counters


For more info about SMB client counters, see Windows Server 2012 File Server Tip: New per-share SMB client performance counters provide great insight.